Algorithms to Live By

Introduction¶

Long before algorithms were ever used by machines, they were used by people.

- Algorithm (Origin ~ al-Khwarizmi, persian mathematician)

1. Optimal Stopping¶

When to Stop Looking.

The Apartment Hunter's Dilemma¶

- Imagine you are searching for an apartment.

- The booming techg sector and tight zoning laws limiting new construction have conspired to make the city just as expensive as any other big city.

- New listings go up and come down in minutes.

- This creates a new problem, you can take the apartment you are currently looking at, forsaking all others, or you can walk away, never to return.

- How do you make a satisfying decision for an apartment to choose?

Getting a Baseline¶

- You will need a baseline to judge the current apartment.

- To get the baseline you will need to go through a certain amount of apartments to get an estimate i.e. our baseline.

- The more information you gather, the better you will know the right opportunity when you see it - but the more likely you are to have already passed it by.

- So what do you do? How do you make informed choices when the very act of informing it jeopardizes the outcome?

Looking and Leaping¶

- How long to look for and when to leap at an option.

- The Answer : 37%

- If you want the best odds of getting the best apartment, spend 37% of your apartment hunt without committing, exploring options.

- After the 37% of research is done, now you form a baseline based on what you have already seen.

- Now, be ready to commit to the first place that beats whatever you have already seen.

The Secretary Problem¶

- "In any optimal stopping problem, the crucial dilemma is not which option to pick, but how many options to even consider."

- Problem Statement

- Imagine you are interviewing a set of applicants for the position of a secretary.

- Your goal is to maximise the chance of hiring the single best applicant in the pool.

- You interview applicants in random order, one at a time.

- You can decide to offer job to an applicant at any point and they are guaranteed to accept, terminating the search.

- If you pass over an applicant, they are gone forever.

- Similar to the 'Apartment Hunter's dilemma'

- We don’t have an objective or preexisting sense of what makes for a good or a bad applicant; moreover, when we compare two of them, we know which of the two is better, but not by how much.

- It’s this fact that gives rise to the unavoidable “look” phase in which we risk passing a superb early applicant while we calibrate our expectations and standards.

- These types of problems are referred as no-information games

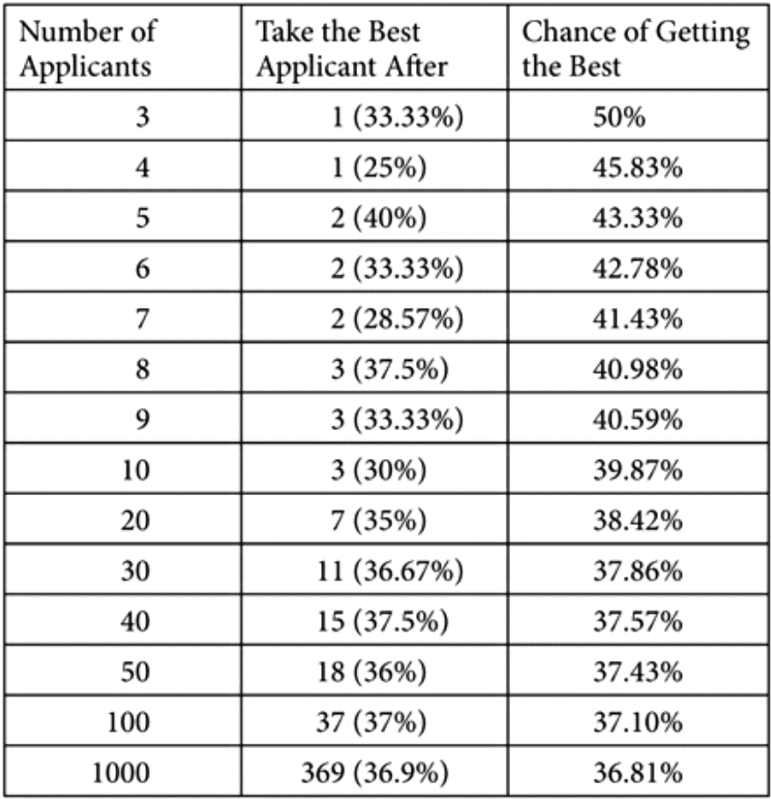

How to optimally choose a secretary

How to optimally choose a secretary

Intuition / Thoughts¶

- "In your search for a secretary, there are two ways you can fail: stopping early and stopping late"

- Stopping early leaves the best applicant left undiscovered

- Stopping late ends up in you holding out for a better applicant that does not exist.

- If your aim is to find the best applicant, it's clear that as you go through interviews, you shouldn't consider hiring someone who is not the best so far.

- Simply being best till now is not enough, the first candidate will always win then.

- the rate at which we encounter “best yet” applicants will go down as we proceed in our interviews."

- the second applicant has a 50/50 chance of being the best we’ve yet seen, but the fifth applicant only has a 1-in-5 chance of being the best so far.

- Best yet results will become steadily more impressive as the search continues, as the probability to get the best yet will decrease with every new candidate.

- But on the other hand, the will become more and more infrequent.

- So, we know taking the first best-yet applicant we encounter is a bad idea.

- So when should we stop such that we have about the best candidate, without risking losing them by Stopping late.

Look-Then-Leap Rule¶

- Set a predetermined amount of time for “looking”—that is, exploring your options, gathering data—in which you categorically don’t choose anyone, no matter how impressive

- After that point, you enter the “leap” phase, prepared to instantly commit to anyone who outshines the best applicant you saw in the look phase

- As the applicant pool grows, the exact place to draw the line between looking and leaping settles to 37% of the pool, yielding the 37% Rule.

37% Rule¶

- look at the first 37% of the applicants,* choosing none, then be ready to leap for anyone better than all those you’ve seen so far

- Here, having 63% failure rate, when following the best possible strategy, is a sobering fact.

- Even when we act optimally in the secretary problem, we will still fail most of the time—that is, we won’t end up with the single best applicant in the pool.

- remarkably, the math of the secretary problem doesn’t change. If you’re stopping optimally, your chance of finding the single best applicant in a pool of a hundred is 37% and in a pool of a million, believe it or not, your chance is still 37%

Flexibility in 37% Rule¶

- it can be applied to either the number of applicants or the time over which one is searching

Example : Renting an Apartment¶

- Let's say you got 14 days to look for a new rental apartment.

- Spend 37% of that time deciding the baseline.

- You will end up spending 4.5 days approx setting a benchmark, the first apartment you find better that the benchmark, you cash in

Example: Finding Love¶

- Let's say you want to date seriously, but do not know your type, how do you know when to commit and when to just sail the seas?

- Take the time span as a metric, Let's say you are 21 years old, and plan to marry by 28, you are new to dating game.

- You should spend 37% of the 7 years you have setting a benchmark, exploring, keeping your options open.

- After the 2 years and 7 months of hopping around you should decide your benchmark based on the best partner you thought you had.

- Now, anyone you find who seems to be better than your benchmark, you should commit fully!

Full Information¶

- Knowing a Good thing when you see it, that is the context of this problem.

- look noncommittally for a time, then be ready to leap.

- Full information means we don't have to look before we leap.

-

Threshold Rule: we immediately accept an applicant if she is above a certain percentile.

- We don't need to look at an initial number of candidates to set this threshold, instead we need to be keenly aware of how much looking remains available.

Gold digging is more likely to succeed than a quest for love

- We don't need to look at an initial number of candidates to set this threshold, instead we need to be keenly aware of how much looking remains available.

-

If you are evaluating your partner based on a kind of objective criterion - their income percentile - you've got a lot more information at your disposal than if you are after a nebulous emotional response "love" that might require both comparison and experience to calibrate.

When to use the Full information Rule?¶

- Any yardstick that provides full information on where an applicant stands relative to the population at large will change the solution from the Look-Then-Leap Rule to the Threshold Rule

- This will dramatically boost your chances of finding the single best applicant in the group.

When to Sell¶

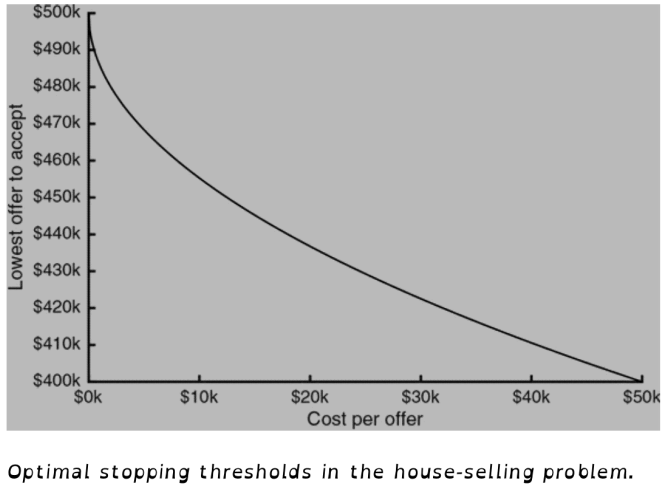

- Selling a house is similar to the #Full Information game.

- Our goal is not to secure the single best offer - it's to make the most money through the process overall.

- waiting has a cost measured in dollars, a good offer today beats a slightly better one several months from now

Sample Case¶

- one of the simplest cases: where we know for certain the price range in which offers will come, and where all offers within that range are equally likely.

- This result cares only about the difference between the highest and lowest offers you’re likely to receive.

- if waiting costs half or more of our expected range of offers—in this case, $50,000—then there’s no advantage whatsoever to holding out; we’ll do best by taking the very first offer that comes along and calling it done

- This principle applies to any situation where you get a series of offers and pay a cost to seek or wait for the next.

- In house selling and job hunting, even if it’s possible to reconsider an earlier offer, and even if that offer is guaranteed to still be on the table, you should nonetheless never do so.

- If it wasn't above your threshold then, it won't be above your threshold now.

What you’ve paid to keep searching is a sunk cost. Don’t compromise, don’t second-guess.

- If it wasn't above your threshold then, it won't be above your threshold now.

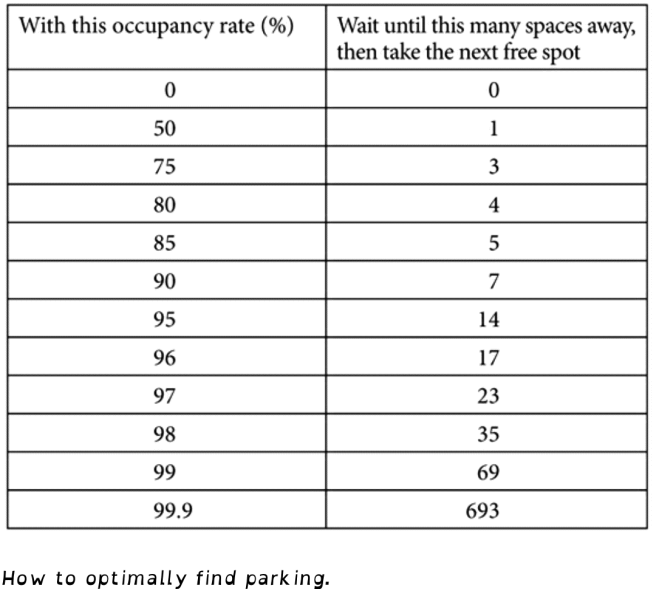

When to Park¶

"the three major administrative problems on a campus are sex for students, athletics for alumni and parking for faculty" - Clark Kerr, President, UC Berkeley, 1958-1967

- As for planning on ‘unexpected traffic,’ you should plan on expected traffic

- ~ Shoup, The High Cost of Free Parking

- If the cost of parking in a particular location is too low (or—horrors!—nothing at all), then there is a high incentive to park there, rather to park a little farther away and walk.

The Big Decision¶

- When you come across an empty parking spot along the street you have to make a decision.

- should you take this spot, or go a little closer to your destination and try your luck?

- The Solution: #Look-Then-Leap Rule

- Optimally stopping driver should pass up all the vacant spots occurring more than a certain distance from the destination and then take the first space that appears thereafter.

- the distance at which to switch from looking to leaping depends on the proportion of spots that are likely to be filled—the occupancy rate

How to optimally find parking

How to optimally find parking

A policy reminder to municipal governments¶

- parking is not as simple as having a resource (spots) and maximising its utilisation (occupancy).

- Parking is also a process—an optimal stopping problem—and it’s one that consumes attention, time, and fuel, and generates both pollution and congestion

When to Quit¶

- Is there a way "perhaps, to think mathematically about the advice to “quit while you’re ahead

- Surprisingly, not giving up—ever—also makes an appearance in the optimal stopping literature.

- there are sequential decision-making problems for which there is no optimal stopping.

- Example, the game of “triple or nothing.” Imagine you have $1.00, and can play the following game as many times as you want: bet all your money, and have a 50% chance of receiving triple the amount and a 50% chance of losing your entire stake.

Some problems are better avoided than solved.

- Example, the game of “triple or nothing.” Imagine you have $1.00, and can play the following game as many times as you want: bet all your money, and have a 50% chance of receiving triple the amount and a 50% chance of losing your entire stake.

Always be Stopping¶

- Whether it involves secretaries, fiancé(e)s, or apartments, life is full of optimal stopping.

- people tend to stop early, leaving better applicants unseen.

- In a study over #The Secretary Problem, most people acted in a way that was consistent with the Look-Then-Leap Rule, but they leapt sooner than they should have more than four-fifths of the time.

- another consideration that isn’t taken into account in the classical secretary problem: the role of time.

- After all, the whole time you’re searching for a secretary, you don’t have a secretary.

- You are spending the day conducting interviews instead of getting your own work done.

- After searching for a while, we humans just tend to get bored.

- the flow of time turns all decision-making into optimal stopping.

- The secretary problem’s most fundamental yet most unbelievable assumption—its strict seriality, its inexorable one-way march—is revealed to be the nature of time itself.

- Intuitively, we think that rational decision making means exhaustively enumerating our options, weighing each one carefully , and then selecting the bst.

- But in practice, when the clock—or the ticker—is ticking, few aspects of decision-making (or of thinking more generally) are as important as one: when to stop

2. Explore / Exploit¶

- Do we try new things or stick with our favourite ones?

- We intuitively understand that life is a balance between novelty and tradition, between the latest and the greatest, between taking risks and savoring what we know and love.

- Exploration is gathering information, and exploitation is using the information you have to get a known good result.

- exploration can be a curse.

- Too much exploration can annoy you, and leave you stranded without anything to exploit or stick to at all.

- when you constantly have to explore the new you can never enjoy the fruits of your connoisseurship—a particular kind of hell.

Journalists are martyrs, exploring so that others may exploit.

The Multi Armed Bandit Problem¶

- The name comes from the colloquial(informal) term for a casino slot machine, the "one armed bandit"

- Imagine, you walk in a casino, there are multiple slot machines.

- Each of these machines have their own odds of a payoff.

- The rub is that you aren't told those odds in advance; you won't have any idea which machines are most lucrative and which are money sinks.

- Your goal : To maximize your total winnings.

- To achieve this goal there will be two steps:

- Exploring: Pulling arms of different machines to test them out

- Exploiting: Favouring the most promising machines you have found.

Problem's Subtleties¶

- To get a sense for the problem’s subtleties, imagine being faced with only two machines.

- One you’ve played a total of 15 times; 9 times it paid out, and 6 times it didn’t.

- The other you’ve played only twice, and it once paid out and once did not. Which is more promising?

- Simply dividing the wins by losses, we get first machine to have a win rate of 60% and 50% for the second machine.

- But there's more than that, 2 pulls might not be good enough and there might be more to the second machine that what seems as of now.

-

We don't know yet how good the second machine is.

-

This brings up the question Which of the two arms should you pull?

- The answer depends on something we haven't discussed yet, "how long do you plan to be in the casino?"

Use-cases¶

- Choosing a restaurant or an album is, in effect, a matter of deciding which arm to pull in life’s casino.

- What is the quickest way to determine which compound is likely to be effective against a disease?

- Multiple options to pursue, different probability of reward for each option, a certain amount of effort (money, time) to be allocated among them.

- #The Online Bandits

- # A better way to do Clinical Trials

Where does this come handy?¶

- Explore-exploit trade-off provides fundamental insights into how our goals should change as we age, and why the most rational course of action is not always trying to choose the best.

- If you’re thinking not just about the next decision, but about all the decisions you are going to make about the same options in the future, the explore/exploit tradeoff is crucial to the process.

Seize the Interval¶

- When balancing favourite experiences and new ones, nothing matters as much as the interval over which we plan to enjoy them.

- You are more likely try a new restaurant when you move to a city than when you are leaving it.

- The value of exploration, of finding a new favourite, can only go down over time, as the remaining opportunities to savor it dwindle

- The value of exploitation can only go up over time.

- The best book you know today, is at least as good as the best book you loved last month.

- So, explore when you will have time to use the resulting knowledge, exploit when you’re ready to cash in.

Win-stay Strategy¶

- The Win-Stay, Lose-Shift algorithm: choose an arm at random, and keep pulling it as long as it keeps paying off.

- Even though the above strategy is far from being the complete solution, it performs reliably better than chance.

- Win-stay turns out to be an element of the optimal strategy for balancing exploration and exploitation

- lose-shift is another story.

- Changing arms each time one fails is a pretty rash move. Imagine going to a restaurant a hundred times, each time having a wonderful meal.

- Would one disappointment be enough to induce you to give up on it?

- Good options shouldn't be penalized too strongly for being imperfect.

- Moreover, this Win-stay Lose-shift method doesn't have any notion of time interval over which you are optimizing.

- This strategy doesn't care if it's your last day in the city or the first.

Discounting¶

- Companies want to be around theoretically forever, and on the medical side a breakthrough could go help people who are not even born yet.

- The present holds higher priority: a cured patient today is more valuable than one cured a week or a year from now.

- Same logic applies to profit.

- Economists refer to this idea of valuing the present more highly than the future, as "discounting".

The Gittins Index¶

- John Gittins was Hired by Unilever to optimize some of their drug trials.

- Problem statement as in #Use-cases.

- Gittins conceived the goal as maximizing payoffs not for a fixed interval of time, but for a future that is endless yet discounted.

- He made the assumption that the value assigned to payoffs decreases geometrically.

- Each restaurant visit you make is worth a constant fraction of the last one.

- Let's say you assume a 1% chance that you might be hit by a bus any given day, then you should value tomorrow's dinner at 99% value of today's dinner.

-

For every slot machine we know little or nothing about, there is some guaranteed payout rate which, if offered to us in lieu of that machine, will make us quite content never to pull its handle again

- This number suggest an obvious strategy on the casino floor: always play the arm with the highest Index.

- Gittins called it the "dynamic allocation index", it later came to be known as Gittins Index.

-

The Gittins index provides a formal, rigorous justification of preferring the unknown, provided we have opportunities to exploit the results of exploration.

- "Grass is greener on the other side of the fence", heard the saying?

- Mathematically, the unknown has chance of being better, even if we actually expect it to be no different, or if it's just as likely to be worse.

- The untested rookie is worth more, than the veteran of seemingly equal ability, precisely because we know less about him.

- Exploration in itself has value, since trying new things increase our chances of finding the best.

Limitations of Gittins Index¶

- Gittins index provides an amazingly straightforward solution to multi-armed bandit problem.

- But,

- The Gittins Index is optimal only under strong assumptions.

- It's based on Geometric discounting of future reward, value each pull at a constant fraction of the previous one.

- If there is a cost to switching among options, the Gittins strategy is no longer optimal.

Regret and Optimism¶

“To try and fail is at least to learn; to fail to try is to suffer the inestimable loss of what might have been.”

Confidence Intervals¶

- How confident are you when it comes to trusting a way/option?

- This value is defined by statistics indicating uncertainty in the measurement.

- A range of values, calculated from sample data, that is likely to contain the true value of an unknown population parameter

Regret¶

- Computer Science cannot offer you a life with no regret, but it can, potentially offer you a life with minimal regret.

- Assuming you are omniscient, your total amount of regret will never stop increasing, even if you pick the best possible strategy.

- Even the best strategy is not perfect everytime.

- Regret will increase at a slower rate if you pick the best strategy instead of others.

- Minimum possible regret, is regret that increases at logarithmic rate with every pull of the handle.

- We cannot expect someday to never have any more regrets.

Regret Minimization Framework¶

- by Jeff Bezos

- I would project myself forward to age 80 and say, "Now that I am looking back at my life, I want to have minimized the number of regrets I have."

- If not doing something would make you regret not doing it in the future, it is a big sign that you should spend time to do it now.

Upper Confidence Bound Algorithms¶

- Algorithms occurring guarantee of minimal regret.

- Like the Gittins Index, these algorithms assign a single number to each arm of the multi-armed bandit.

- This number is set to the highest value that the arm could reasonably have, based on the information so far.

- The upper confidence bound algorithm doesn't care which arm has performed best so far; instead it chooses the arm that could reasonably perform best in the future.

- These algorithms implement a principle dubbed from "optimism in the face of uncertainty".

The Online Bandits¶

- The Bandit Machines are now present everywhere online!

-

Different users see different thumbnails and based on the click through rate a thumbnail to show to mass audience is decided.

- This is known as A/B Testing.

If you’ve used the Internet basically at all over the past decade, then you’ve been a part of someone else’s explore/exploit problem

- This is known as A/B Testing.

-

Companies A/B test their site navigation, the subject lines and timing of their marketing emails, and sometimes even their actual features and pricing

- Next time you open your browser, you can be sure that the colors, images, text, perhaps even the prices you see—and certainly the ads—have come from an explore/exploit algorithm, tuning itself to your clicks.

A better way to do Clinical Trials¶

Avoiding harm requires learning what is harmful; and in the process of obtaining the information, persons may be exposed to risk of harm.

Adaptive Trials¶

- Proposed by Marvin Zelen, biostatistician.

- Uses randomized, "play the winner" algorithm.

- A version of win-stay, lose-shift, in which thee chance of using a given treatment is increased by each win and decreased by each loss.

Procedure¶

- Start with a hat containing one ball each of the two treatment options being studied.

- The treatment for the first patient is selected by drawing a ball at random from the hat (put the ball back in the hat).

- If the chosen treatment is a success,

- You put another ball for that treatment in the hat.

- If the chosen treatment fails,

- You put another ball for the failed treatment into the hat, making it more likely you'll choose the alternative.

The Restless World¶

- Once you become familiar with them, it’s easy to see multi-armed bandits just about everywhere we turn.

- In general, it seems that people tend to over-explore—to favor the new disproportionately over the best.

- A study conducted where passengers were given a choice between two options, one with a known payoff chance and one unknown.

- Specifically, two airlines. An established carrier with a known on time rate, and a new company without a track record.

- Given the goal of maximizing the number of on-time arrivals over some period of time, the mathematically optimal strategy is to initially only fly the new airline, as long as the established one isn’t clearly better.

- Given the goal of maximizing the number of on-time arrivals over some period of time, the mathematically optimal strategy is to initially only fly the new airline, as long as the established one isn’t clearly better.

-

while we tend to commit to a new secretary too soon, it seems like we tend to stop trying new airlines too late.

To live in a restless world requires a certain restlessness in oneself.

-

So long as things continue to change, you must never fully cease exploring

Explore¶

- When you were a child you were not good at tying your shoelace, long term planning, or focused attention.

- But pressing buttons at random, being very interested in new toys, and jumping quickly from one thing to another are all things that you were really great at.

- And those are the exact things you would have been doing if your goal was exploration.

- Our intuitions about rationality are too often informed by exploitation rather than exploration.

- If you treat every decision as if it were your last, then indeed only exploitation makes sense.

- over a lifetime, you’re going to make a lot of decisions. And it’s actually rational to emphasize exploration

- The new, rather than the best. The exciting rather than the safe!

And Exploit..¶

- I had reached a juncture in my reading life that is familiar to those who have been there: in the allotted time left to me on earth, should I read more and more new books, or should I cease with that vain consumption—vain because it is endless—and begin to reread those books that had given me the intensest pleasure in my past.

- ~ Lydia Davis

- the size of people’s social networks (that is, the number of social relationships they engage in) almost invariably decreases over time

- Laura Carstensen, a professor of psychology has argued that

- 'the elderly have fewer social relationships by choice.'

- The result of lifelong selection processes by which people strategically and adaptively cultivate their social networks to maximize social and emotional gains and minimize social and emotional risks.

- old age is a time of exploitation helps provide new perspectives on some of the classic phenomena of aging.

- See it as, when you are young, you explored relationships, people and had a lot of people around you. But as you grew up you focused more on exploiting the best you had instead of exploring more and more relationships.

How to take the advice from your elders¶

- The explore/exploit tradeoff also tells us how to think about advice from our elders.

-

Perhaps the deepest insight that comes from thinking about later life as a chance to exploit knowledge acquired over decades is this: life should get better over time.

What an explorer trades off for knowledge is pleasure.

-

Shifting the bulk of one's attention to one's favourite things should increase quality of life.

- Carstensen has found that older people are generally more satisfied with their social networks, and often report levels of emotional well being that are higher than those of younger adults.

- This is another incentive, to explore and find things you like, to exploit and settle on!

3. Sorting¶

-

one of the main reasons things get sorted is to be shown in useful form to human eyes, which means that sorting is also key to the human experience of information.

We refer to things like Google and Bing as “search engines,” but that is something of a misnomer: they’re really sort engines

-

The truncated top of an immense, sorted list is in many ways the universal user interface

The Agony of Sorting¶

- With sorting, size is a recipe for disaster: perversely, as a sort grows larger, “the unit cost of sorting, instead of falling, rises.”

- The first and most fundamental insight of sorting theory. Scale hurts.

- Minimizing our pain and suffering when it comes to sorting is all about minimizing the number of things we have to sort.

The Big Oh!¶

- Computer science, almost never cares about the best case.

- Big-O notation has a particular quirk, which is that it’s inexact by design.

- Because Big-O notation deliberately sheds fine details, what emerges is a schema for dividing problems into different broad classes.

- The existence of any linear-time factors will, in Big-O notation, swamp all constant-time factors.

- Then there is, “factorial time,” O(n!), a class of problems so truly hellish that computer scientists only talk about it when they’re joking"

Breaking outside the Quadratic Barrier¶

- In 1936, IBM began producing a line of machines called “collators” that could merge two separately ordered stacks of cards into one.

- Sorting two cards is simple: just put the smaller one on top. And given a pair of two-card stacks, both of them sorted, you can easily collate them into an ordered stack of four. Repeating this trick a few times, you’d build bigger and bigger stacks, each one of them already sorted.

- Mergesort is as important in the history of sorting as sorting in the history of computing.

- With a time complexity of O(n log n), known as “linearithmic”

- To say that linearithmic complexity is an improvement on quadratic complexity is a titanic understatement.

- It is rather a humongous jump hidden behind the simple veil of O(n^2) and O(n log n)

Bucket Sort - sorting without item-to-item comparisons.¶

- sometimes sorting can be done without any item-to-item comparisons at all.

- These two principles, taken together, allow for rough practical sorts in faster than linearithmic time.

- This is beautifully demonstrated by an algorithm known as Bucket Sort.

- e.g. Preston Sort

- As long as the number of buckets is relatively small compared to the number of items, Big-O notation will round that to O(n), or linear time.

- a good strategy is to Bucket Sort until you get down to small enough piles that Insertion Sort is reasonable, or to have a Mergesort pizza party.

But, should you be sorting?¶

- Should you be sorting in the first place?

- The effort expended on sorting materials is just a preemptive strike against the effort it’ll take to search through them later.

- Sorting something that you will never search is a complete waste; searching something you never sorted is merely inefficient.

- Sorting is done by machines ahead of time, before the results are needed, and searching is done by users for whom time is of the essence.

-

As the cost of searching drops, sorting becomes less valuable.

The hazards of mess and the hazards of order are quantifiable and that their costs can be measured in the same currency: time

-

Sometimes mess is more than just the easy choice. It’s the optimal choice.

Is bubble sort still used anywhere?¶

- We humans sort more than our data, more than our possessions. We sort ourselves.

- In computer science unnecessary comparisons are always bad, a waste of time and effort.

- But in sports that’s far from the case. In many respects, after all, the games themselves are the point.

- Bubble Sort's very inefficiency—moving items only one position at a time—makes it fairly robust against noise, far more robust than faster algorithms like Mergesort, in which each comparison potentially moves an item a long way

- Comparison Counting Sort. In this algorithm, each item is compared to all the others.

- Without this, we can get a single best winner out of all in sports, but we wouldn't be able to say that the 2nd, 3rd ... are absolute 2nd, 3rd, etc.

- The biggest problem in Single Elimination, as we’ve said, would seem to be a scenario where the first team that gets eliminated by the winning team is actually the second-best team overall

Displacement¶

- Displacement happens when an animal uses its knowledge of the hierarchy to determine that a particular confrontation simply isn’t worth it.

- Dominance hierarchies are ultimately information hierarchies.

- Having a benchmark—any benchmark—solves the computational problem of scaling up a sort.

4. Caching¶

Forget about it.

- In the practical use of our intellect, forgetting is as important a function as remembering.

The Memory Hierarchy¶

- The smaller memory “automatically accumulates to itself words that come from a slower main memory, and keeps them available for subsequent use without it being necessary for the penalty of main memory access to be incurred again.”

- The idea of keeping around pieces of information that you refer to frequently is so powerful that it is used in every aspect of computation.

- There comes a time when for every addition of knowledge you forget something that you knew before. It is of the highest importance, therefore, not to have useless facts elbowing out the useful ones.

- Sherlock Holmes

Clairvoyance¶

- I like this word enough that I think it deserves one complete subheading.

- Clairvoyance - the supposed faculty of perceiving things or events in the future or beyond normal sensory contact.

- Basically, knowing it all before it would happen.

- A Clairvoyant algorithm: An algorithm informed by data from the future.

- Why is Clairvoyance important?

- Because it gives us the optimal state, the optimal algorithm to compare with for the algorithms we make.

- How good are our algorithms as compared to a solution which would have been perfect?

- “If you know the sequence ahead of time,” says Tarjan, who splits his time between Princeton and Silicon Valley, “you can customize the data structure to minimize the total time for the entire sequence.

- That’s the optimum offline algorithm: God’s algorithm if you will, or the algorithm in the sky.

Eviction and Clairvoyance¶

- Since the [cache] can only be a fraction of the size of the main memory, words cannot be preserved in it indefinitely, and there must be wired into the system an algorithm by which they are progressively overwritten.

- the goal of cache management is to minimize the number of times you can’t find what you’re looking for in the cache and must go to the slower main memory to find it.

- aka, page faults or cache misses

- Bélády’s Algorithm is an instance of what computer scientists call a “clairvoyant” algorithm: one informed by data from the future.

The LRU Principle¶

- LRU principle is effective because of something computer scientists call “temporal locality”.

- Windows and Mac OS task switching interfaces: when you press Alt + Tab or Command + Tab, you see your applications listed in order from the most recently to the least recently used.

- LRU teaches us that the next thing we can expect to need is the last one we needed, while the thing we’ll need after that is probably the second-most-recent one and the last thing we can expect to need is the one we’ve already gone longest without.

The Law of Large Numbers¶

- As the number of independent, identically distributed trials of an experiment increases, the sample mean (or average) of the results will converge towards the expected value.

Amazon's Cache Patent¶

- "Anticipatory package shipping" patent.

- Amazon could possibly ship you something before you bought it, how? Through caching.

- Their patent is actually for shipping items that have been recently popular in a given region to a staging warehouse in that region—like having their own CDN for physical goods.

- Anticipating the purchases of individuals is challenging, but when predicting the purchases of a few thousand people, #The Law of Large Numbers kicks in.

Caching at Home¶

- When you are deciding what to keep and what to throw away, LRU is potentially a good principle to use—much better than FIFO.

- Make sure things are in whatever cache is closest to the place where they’re typically used.

- Be it keys placed right at the entrance side of the door, shoes in the shoe rack, formal shoes in cupboard and rain shoes in some corner of the house.

- Having a cache is efficient, but having multiple levels of caches—from smallest and fastest to largest and slowest—can be even better

- A valet stand is essentially a one-outfit closet, a compound hanger for jacket, tie, and slacks—the perfect piece of hardware for your domestic caching needs

Filing and Piling¶

- if you follow the LRU principle—where you simply always put an item back at the very front of the list—then the total amount of time you spend searching will never be more than twice as long as if you’d known the future (The God's Algorithm).

The Left Side insertion Rule (Noguchi Filing System)¶

- The left-side insertion rule, Noguchi specifies, has to be followed for old files as well as new ones:

- Every time you pull out a file to use its contents, you must put it back as the leftmost file when you return it to the box.

- The most recently accessed files are thus the fastest to find.

- The Noguchi Filing System clearly saves time when you’re replacing something after you’re done using it.

Is that pile of papers on your desk actually good for you?¶

- What might appear to others to be an unorganized mess is, in fact, a self-organizing mess.

- Tossing things back on the top of the pile is the very best you can do, shy of knowing the future.

- there’s a very different reason why you don’t need to organize it. You already have.

The Forgetting Curve¶

- The number of items one can accurately recall goes down as time passes

- A natural way to think about forgetting is that our minds simply run out of space.

- Anderson’s new account of human memory states that the problem might be not one of storage, but of organization.

- his theory, the mind has essentially infinite capacity for memories, but we have only a finite amount of time in which to search for them.

- The question was straightforward: what patterns characterize the way the world itself “forgets”, the way that events and references fade over time?

- Anderson and Schooler analyzed three human environments: headlines from the New York Times, recordings of parents talking to their children, and Anderson’s own email inbox.

- Reality itself has a statistical structure that mimics the Ebbinghaus curve.

- Caching shows us that memory involves unavoidable tradeoffs, and a certain zero-sumness

Why is it necessary to forget though?¶

- Because, it is just too expensive to maintain access to an unbounded number of items.

- Unavoidably, the larger a memory is, the more time it takes to search for and extract a piece of information from it.

- It’s a wonder that the two of us—or anyone—can mentally keep up at all.

-

The old can mock the young for their speed: “It’s because you don’t know anything yet!”

- Simply knowing more makes things harder when it comes to recognizing words, names, and even letters.

- No matter how good your organization scheme is, having to search through more things will inevitably take longer.

It’s not that we’re forgetting; it’s that we’re remembering. We’re becoming archives.

-

A lot of what is currently called decline is simply learning.

- If you happen to have tough time as you grow older remembering names, this is a function of the amount of stuff that we have to sift through.

- This is not a sign of a failing mind.

- ~ Michael Ramscar [The Myth of Cognitive Decline]

- The disproportionate occasional lags in information retrieval are a reminder of just how much we benefit the rest of the time by having what we need at the front of our minds.

- The length of a delay is partly an indicator of the extent of your experience. The effort of retrieval is a testament to how much you know. And the rarity of those lags is a testament to how well you’ve arranged it: keeping the most important things closest to hand.

5. Scheduling¶

"How we spend our days is, of course, how we spend our lives." ~ Annie Dillard

We are what we repeatedly do ~ Aristotle

- Getting Things Done advocates a policy of immediately doing any task of two minutes or less as soon as it comes to mind.

- Eat That Frog! advises beginning with the most difficult task and moving toward easier and easier things.

Spending time becomes a science¶

time management seems a problem as old as time itself.

How to use a washing machine and a dryer efficiently?¶

- Let's say you have n loads for laundry, you have a washing machine and a dryer.

- You can put in one load at a time in a machine.

- Different loads will take different times in each device.

- A heavily soiled load might take longer to wash but the usual time to dry, a large load might take less time to wash and more time to dry.

- You should begin by finding the single step that takes the least amount of time.

- If the shortest step involves the washer, do that job first.

- If the shortest step involves the dryer, do it last.

- By having the shortest washing times at the start, and the shortest drying times at the end, you maximize the amount of overlap—when the washer and dryer are running simultaneously.

- The scheduling problem that matters the most involves just one machine: ourselves.

Handling Deadlines¶

- If you have only a single machine, and you’re going to do all of your tasks, then any ordering of the tasks will take you the same amount of time.

- The first lesson in single-machine scheduling is make your goals explicit.

- Think of the “maximum lateness” of a set of tasks as the lateness of whatever task has gone furthest past its due date.

Earliest Due Date¶

- If you’re concerned with minimizing maximum lateness, then the best strategy is to start with the task due soonest and work your way toward the task due last.

- It is optimal assuming that you’re only interested in one metric in particular: reducing your maximum lateness.

Moore's Algorithm¶

- Want to minimize the number of foods that spoil instead?

- Moore’s Algorithm says that we start out just like with Earliest Due Date—by scheduling out our produce in order of spoilage date, earliest first, one item at a time.

- However, as soon as it looks like we won’t get to eating the next item in time, we pause, look back over the meals we’ve already planned, and throw out the biggest item (that is, the one that would take the most days to consume).

- What this means is throwing away a large watermelon that might take 10 servings in order to attempt everything that comes after it.

- We repeat this pattern, laying out the foods by spoilage date and tossing the largest already scheduled item any time we fall behind.

Getting Things Done¶

Do the difficult things while they are easy and do the great things while they are small.

Shortest Processing Time¶

- always do the quickest task you can.

- It is compatible with the recommendation in Getting Things Done to immediately perform any task that takes less than two minutes.

- May ease your mind by shrinking the number of outstanding tasks as quickly as possible.

Picking Our Problems¶

A man with one watch knows what time it is; a man with two watches is never sure.

- Computer science can provide us with optimal algorithms for various metrics available in single machine scheduling.

- Our tasks, and our life when we think of is similar to single machine scheduling.

- In many cases we get to decide what problem we want to be solving.

- This offers a radical way to rethink procrastination, the classic pathology of time management.

- We typically think of it as a faulty algorithm.

- What if it’s exactly the opposite? What if it’s an optimal solution to the wrong problem?

- Staying focused not just on getting things done but on getting weighty things done—doing the most important work you can at every moment—sounds like a surefire cure for procrastination.

Procrastination as a DOS Attack¶

- When we procrastinate, we tend to do things and tasks which do not matter as much as the one we should be doing.

- But the tasks we end up doing seem easier to do, more comfortable.

- When a DOS attack happens on a system, similar thing occurs, the system is overwhelmed by trivial things it has to do and the important things get lost in the chaos.

- We typically associate procrastination with laziness or avoidance behavior.

- but it can just as easily spring up in people (or computers) who are trying earnestly and enthusiastically to get things done as quickly as possible.

Pre-castination¶

- The tendency to complete tasks as quickly as possible, even if it means doing them sooner than necessary or at the expense of extra effort or potentially lower quality work. (source ~ Google)

- The hastening of sub-goal completion, even at the expense of extra physical effort.

- Putting off work on a major project by attending instead to various trivial matters can likewise be seen as "the hastening of subgoal completion."

The Low-priority tasks that DOS us,¶

- Working on a computer brings with it an additional hazard when it comes to being conscious and deliberate about our scheduling metrics.

- The user interface may subtly (or not so subtly) force its own metric upon us.

- Modern smartphone user, for instance, is accustomed to seeing “badges” hovering over application icons, ominous numbers in white-on-red signalling exactly how many tasks each particular app expects us to complete.

Weighted Scheduling - Doing the harder task first¶

- ~ Sounds like Eat that Frog right?

- Staying focused not just on getting things done but on getting weighty things done—doing the most important work you can at every moment—sounds like a surefire cure for procrastination.

Starvation: Hazard of Priority Inversion¶

- A classic scheduling hazard called priority inversion.

- What happens in a priority inversion is that a low-priority task takes possession of a system resource (access to a database, let’s say) to do some work, but is then interrupted partway through that work by a timer, which pauses it and invokes the system scheduler.

- The Solution? Priority Inheritance

Priority inheritance¶

- If a low-priority task is found to be blocking a high-priority resource, well, then all of a sudden that low-priority task should momentarily become the highest-priority thing on the system, “inheriting” the priority of the thing it’s blocking.

-

The logic behind this is simple,

“Things which matter most must never be at the mercy of things which matter least”.

-

Sometimes that which matters most cannot be done until that which matters least is finished, so there’s no choice but to treat that unimportant thing as being every bit as important as whatever it’s blocking.

Precedence Constraint¶

- When a certain task can’t be started until another one is finished, scheduling theorists call that a precedence constraint.

The issue with Optimal Scheduling¶

-

Scheduling problems are intractable in nature.

- There is no sure way to know the best answer in finite time.

not every problem that can be formally articulated has an answer.

- There is no sure way to know the best answer in finite time.

-

Scheduling problems aren’t unanswerable, per se—but it may simply be the case that there’s no straightforward algorithm that can find you the optimal schedule in a reasonable amount of time.

- The researchers found was that even the subtlest change to a scheduling problem often tips it over the fine and irregular line between tractable and intractable.

- For Example

- Moore’s Algorithm minimizes the number of late tasks (or rotten fruits) when they’re all of equal value—but if some are more important than others, the problem becomes intractable and no algorithm can readily provide the optimal schedule.

Drawing Borders of Scheduling Theory¶

- Recent survey showed that the status of about 7% of all problems is still unknown, scheduling’s terra incognita.

- Of the remaining 93%, only 9$ can be solved efficiently.

- 84% have been proven intractable.

- Most scheduling problems admit no ready solution.

Preemption and Uncertainty¶

The best time to plant a tree is twenty years ago. The second best time is now.

- One twist that can make scheduling life easier: being able to stop one task partway through and switch to another

- When a task’s starting time comes, compare that task to the one currently under way. If you’re working by #Earliest Due Date and the new task is due even sooner than the current one, switch gears; otherwise stay the course.

- Even if you don’t know when tasks will begin, #Earliest Due Date and #Shortest Processing Time are still optimal strategies, able to guarantee you (on average) the best possible performance in the face of uncertainty.

- If assignments get tossed on your desk at unpredictable moments, the optimal strategy for minimizing maximum lateness is still the preemptive version of #Earliest Due Date — switching to the job that just came up if it’s due sooner than the one you’re currently doing, and otherwise ignoring it.

- The weighted version of #Shortest Processing Time is a pretty good candidate for best general-purpose scheduling strategy in the face of uncertainty.

- Each time a new piece of work comes in, divide its importance by the amount of time it will take to complete. If that figure is higher than for the task you’re currently doing, switch to the new one; otherwise stick with the current task.

-

The Good thing about this,

- Under certain assumptions it minimizes not just the sum of weighted completion times, as we might expect, but also the sum of the weights of the late jobs and the sum of the weighted lateness of those jobs

- When the future is foggy, it turns out you don’t need a calendar—just a to-do list.

-

The bad thing, Context Switch!

Preemption isn't free: Context Switch¶

Programmers don’t talk because they must not be interrupted.

- To synchronize with other people (or their representation in telephones, buzzers and doorbells) can only mean interrupting the thought train. Interruptions mean certain bugs. You must not get off the train.

- People and machines face a similar challenge.

- The machine that is doing the scheduling and the machine being scheduled are one and the same.

- Which makes straightening out your to-do list an item on your to-do list—needing, itself, to get prioritized and scheduled.

- Humans clearly have context-switching costs too.

- 21 minutes, it takes us 21 minutes to get back and get focused on a task that we left for a distraction or notification.

- Anyone you interrupt more than a few times an hour is in danger of doing no work at all.

- Scheduling expert Kirk Pruhs, from University of Pittsburgs says,

- If it’s less than an hour I’ll just do errands instead, because it’ll take me the first thirty-five minutes to really figure out what I want to do and then I might not have time to do it

- The truth is, there is always going to be overhead.

- The more you take on, the more overhead there is.

- At its nightmarish extreme, this turns into a phenomenon called thrashing.

Thrashing¶

- thrashing: a system running full-tilt and accomplishing nothing at all.

- Any situation where the system grinds to a halt because it’s entirely preoccupied with metawork

- Under certain conditions a dramatic problem “shows up as you add more jobs to the multiprogramming mix.

- At some point you pass a critical threshold—unpredictable exactly where it is, but you’ll know it when you get there—and all of a sudden the system seems to die.

- The presence of one additional program has caused a complete collapse of service.

- Here scheduling theory intersects caching theory.

- The whole idea of caches is to keep the “working set” of needed items available for quick access.

- The caches are warm for the current workload, and when you context switch you pretty much invalidate all caches.

- At the extreme, a program may run just long enough to swap its needed items into memory, before giving way to another program that runs just long enough to overwrite them in turn.

- In a thrashing state, you’re making essentially no progress.

- So, doing tasks in the wrong order is better than doing nothing at all.

- Along with considerations of memory, one of the biggest sources of metawork in switching contexts is the very act of choosing what to do next

Thrashing in Real Life¶

- If you’ve ever had a moment where you wanted to stop doing everything just to have the chance to write down everything you were supposed to be doing, but couldn’t spare the time, you’ve thrashed.

Ounce of prevention is worth a pound of cure

- Another way to avert thrashing before it starts is to learn the art of saying no.

Interrupt Coalescing¶

- Part of what makes real-time scheduling so complex and interesting is that it is fundamentally a negotiation between two principles that aren’t fully compatible.

- Responsiveness and throughput: how quickly you can respond to things, and how much you can get done overall

-

You must make the responsiveness/throughput tradeoff yourself.

The best strategy for getting things done might be, paradoxically, to slow down.

-

With enough programs running, a task’s slice would shrink to the point that the system was spending the entire slice on context switching, before immediately context-switching again to the next task.

- Establishing a minimum amount of time to spend on any one task helps to prevent a commitment to responsiveness from obliterating throughput entirely.

- If the minimum amount of time slice is longer than the time it takes to context-switch, the system can never enter a state where context switching is the only thing it is doing.

- So instead, the rule that computer operating systems follow when deciding how long they can afford to dedicate themselves to some task is simple: as long as possible without seeming jittery or slow to the user.

- If you find yourself doing a lot of context switching because you’re tackling a heterogeneous collection of short tasks, you can also employ another idea from computer science: interrupt coalescing.

Interrupt Coalescing¶

- Don't pay attention to minor tasks as soon as they arrive, instead club them together (Coalesce them) and take care of them all in one go.

- Computers themselves do something like this: they wait until some fixed interval and check everything, instead of context-switching to handle separate, uncoordinated interrupts from their various subcomponents.

- Examples of Interrupt Coalescing in life,

- At human scale, we get interrupt coalescing for free from the postal system, just as a consequence of their delivery cycle.

- In academia, holding office hours is a way of coalescing interruptions from student.

- This is what computer scientists call batch processing—the alternative is swapping in and out. I don’t swap in and out.

- ~ Donald Knuth

6. Bayes's Rule¶

-

Predicting the Future.

All human knowledge is uncertain, inexact, and partial.

-

Is it worthwhile to wait—and if so, how long should you do so before giving up?

- we often have to make an inference from the smallest amount of data we could possibly have: a single observation.

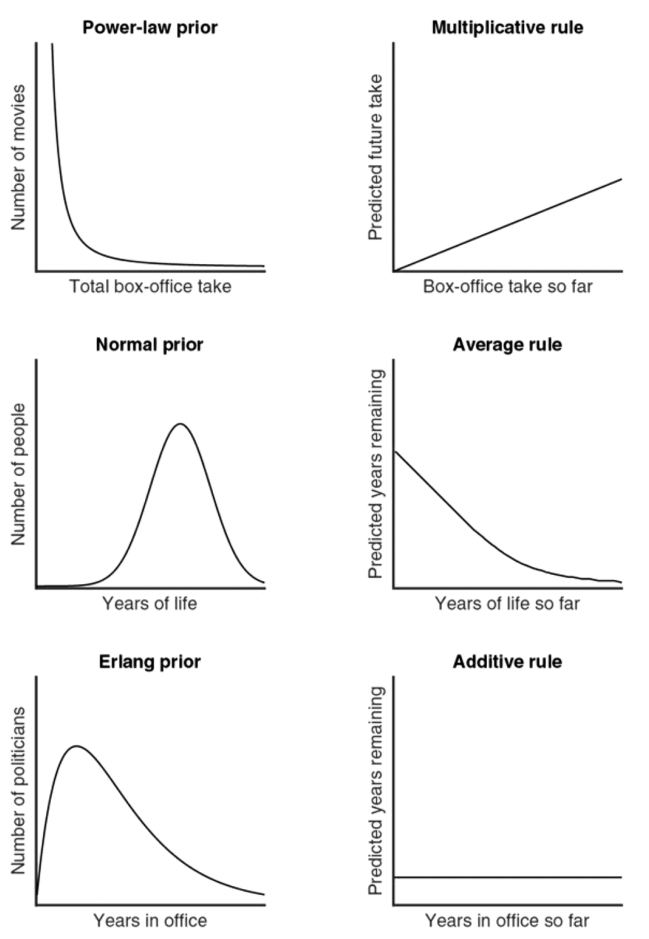

different graphs for different laws and rules of distributtion here

different graphs for different laws and rules of distributtion here

Bayes's Argument in Crux¶

- Reasoning forward from hypothetical pasts lays the foundation for us to then work backward to the most probable one.

- Bayes had found a way to compare the relative probability of one hypothesis to another.

Laplace's Law¶

- Laplace's law states that for any possible drawing of w winning tickets in n attempts, the expectation is simply the number of wins plus one, divided by the number of attempts plus two: (w+1)⁄(n+2).

- This law, called the #Laplace's Law is easy to apply in any situation where you need to assess the chances of an event based on its history.

The Copernican Principle¶

- Unless we know better we can expect to have shown up precisely halfway into the duration of any given phenomenon.

- The Copernican Principle, results in a simple algorithm that can be used to make predictions about all sorts of topics.

- The Copernican Principle suggests that there might be a dramatically simpler and cheaper alternative.

- Simply displaying how long it’s been since the previous bus arrived at that stop offers a substantial hint about when the next one will.

Bayes x Copernican¶

- The Copernican Principle is exactly what results from applying Bayes’s Rule using what is known as an uninformative prior.

- “uniform prior,” which considers every proportion of winning tickets to be equally likely

- The Copernican Principle emerges: if we want to predict how long something will last, and have no other knowledge about it whatsoever, the best guess we can make is that it will continue just as long as it’s gone on so far.

Power-law distributions¶

- These are also known as “scale-free distributions” because they characterize quantities that can plausibly range over many scales.

- Example, the US population make less than the mean income, but the top 1% make almost ten times the mean. And the top 1% of the 1% make ten times more than that.

- For any power-law distribution, Bayes’s Rule indicates that the appropriate prediction strategy is a Multiplicative Rule: multiply the quantity observed so far by some constant factor.

- For an uninformative prior, that constant factor happens to be 2

- The larger the value of that single data point, the larger the scale we’re probably dealing with, and vice versa.

- In a power-law distribution, the longer something has gone on, the longer we expect it to continue going on.

Average Rule¶

- Average Rule: use the distribution’s “natural” average—its single, specific scale—as your guide.

- Something normally distributed that’s gone on seemingly too long is bound to end shortly; but the longer something in a power-law distribution has gone on, the longer you can expect it to keep going.

- Distributions that yield the same prediction, no matter their history or current state, are known to statisticians as “memoryless.”

Small Data and the Mind¶

- The three prediction rules—Multiplicative, Average, and Additive—are applicable in a wide range of everyday situations.

- Small data is big data in disguise.

- The reason we can often make good predictions from a small number of observations—or just a single one—is that our priors (prior data/ knowledge) are so rich.

- Good predictions require good priors.

- The implications of this? Our judgments betray our expectations, and our expectations betray our experience.

- The ability to resist temptation may be, at least in part, a matter of expectations rather than willpower.

- Learning self-control is important, but it’s equally important to grow up in an environment where adults are consistently present and trustworthy.

He is careful of what he reads, for that is what he will write. He is careful of what he learns, for that is what he will know. ~ Annie Dillard

- As a rule, when something surprises us, it ought to surprise us, and when it doesn’t, it ought not to.

- What we talk about isn’t what we experience—we speak chiefly of interesting things, and those tend to be things that are uncommon.

- The representation of events in the media does not track their frequency in the world.

- If you want to be a good intuitive Bayesian—if you want to naturally make good predictions, without having to think about what kind of prediction rule is appropriate—you need to protect your priors

Overfitting¶

- ~When to think less

- The question of how hard to think, and how many factors to consider, is at the heart of a knotty problem that statisticians and machine-learning researchers call “overfitting".

There’s a wisdom to deliberately thinking less.

The Case Against Complexity¶

- Every decision is a kind of prediction

- About how much you’ll like something you haven’t tried yet, about where a certain trend is heading, about how the road less travelled (or more so) is likely to pan out.

- Every prediction, crucially, involves thinking about two distinct things:

- what you know and what you don’t.

- Including more factors in a model will always, by definition, make it a better fit for the data we have already.

- But a better fit for the available data does not necessarily mean a better prediction.

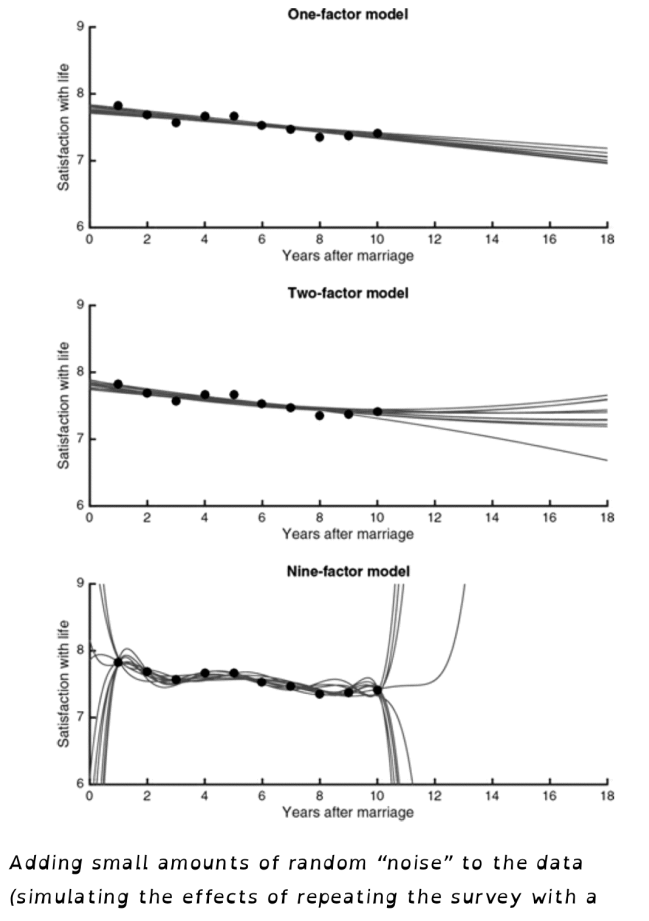

model ovverfitting consisting of two factor model, one factor model and nine factor model for years after marriage.

model ovverfitting consisting of two factor model, one factor model and nine factor model for years after marriage.- If the study were repeated with different people, producing slight variations on the same essential pattern, the one- and two-factor models would remain more or less steady—but the nine-factor model would gyrate wildly from one instance of the study to the next.

- This is what statisticians call overfitting.

The Idolatry of Data¶

- Idolatry (To worship the idols)

- Overfitting is a kind of idolatry of data, a consequence of focusing on what we’ve been able to measure rather than what matters.

- Considering more and more factors and expending more effort to model them can lead us into the error of optimizing.

Overfitting everywhere¶

- Once you know about overfitting, you see it everywhere.

- Simple Example?

- How can it be that the foods that taste best to us are broadly considered to be bad for our health, when the entire function of taste buds, evolutionarily speaking, is to prevent us from eating things that are bad?

- The answer is that taste is our body’s proxy metric for health.

- Fat, sugar, and salt are important nutrients, and for a couple hundred thousand years, being drawn to foods containing them was a reasonable measure for a sustaining diet.

- Beware: when you go to the gym to work off the extra weight from all that sugar, you can also risk overfitting fitness.

- Overfitting the signals—adopting an extreme diet to lower body fat and taking steroids to build muscle, perhaps—can make you the picture of good health, but only the picture.

It really is true that the company will build whatever the CEO decides to measure

Training Scars¶

- Training Scars reflect the fact that it is possible to overfit one's own preparation.

- Police men who were trained to lower their gun at a gun-range after firing three shots did so in real-life situations because of practice.

- This resulted in injuries and even deaths!

- In one situation the police man grabbed the gun from the assailant and then instinctively handed it right back as he had done time and time again with his trainers in the practice.

Detecting Overfitting: Cross Validation¶

- Research in machine learning has yielded several concrete strategies for detecting overfitting, and one of the most important is what’s known as Cross-Validation.

Cross Validation¶

- Cross-Validation means assessing not only how well a model fits the data it’s given, but how well it generalizes to data it hasn’t seen.

- Aside from withholding some of the available data points, it is also useful to consider testing the model with data derived from some other form of evaluation entirely.

How to Combat Overfitting?¶

If you can’t explain it simply, you don’t understand it well enough.

- The fact that the human brain burns about a fifth of humans’ total daily caloric intake is a testament to the evolutionary advantages that our intellectual abilities provide us with.

Penalizing Complexity¶

- overfitting is a symptom of being too sensitive to the actual data we’ve seen.

- The solution then is simple,

- We must balance our desire to find a good fit against the complexity of the models we use to do so.

- When it comes to portfolio management, it turns out that unless you’re highly confident in the information you have about the markets, you may actually be better off ignoring that information altogether

- the study of heuristics shows that less information, computation, and time can in fact improve accuracy

Occam's Razor Principle¶

- the Occam’s razor principle, which suggests that, all things being equal, the simplest possible hypothesis is probably the correct one

The Weight of History¶

- Some of the most fundamental domains of human life, such as the question of what we should put in our bodies, seem curiously to be the ones most dominated by short-lived fads.

- As a species, being constrained by the past makes us less perfectly adjusted to the present we know but helps keep us robust for the future we don’t.

Early Stopping¶

- In machine learning, the advantages of moving slowly emerge most concretely in a regularization technique known as Early Stopping.

- The effectiveness of regularization in all kinds of machine-learning tasks suggests that we can make better decisions by deliberately thinking and doing less.

- Beyond a certain point thinking more about a problem is not only going to be a waste of time and effort—it will lead us to worse solutions.

When to Think Less¶

- How early to stop depends on the gap between what you can measure and what really matters.

- The greater the uncertainty, the bigger the gap between what you can measure and what matters, the more you should watch out for overfitting.

- When you’re truly in the dark, the best-laid plans will be the simplest.

Relaxation¶

- ~ Letting it Slide.

The Difficulty of Optimization¶

Constrained Optimization Problem¶

- How to find the single best arrangement of a set of variables, given particular rules and a score keeping measure.

Defining Difficulty¶

- The Cobham–Edmonds thesis: an algorithm should be considered “efficient” if it runs in what’s called “polynomial time”—that is, \(O(n^2)\), \(O(n^3)\), or in fact n to the power of any number at all.

- Any problem we don’t know how to solve in polynomial time, on the other hand, is considered “intractable.”

- “When the problem is hard, it doesn’t mean that you can forget about it, it means that it’s just in a different status. It’s a serious enemy, but you still have to fight it.”

Just Relaxing¶

The perfect is the enemy of the good ~ Voltaire

Constraint Relaxation¶

- A life saver in disguise for sure!

- Constraint Relaxation: to make the intractable tractable, to make progress in an idealized world that can be ported back to the real one.

- In this technique, researchers remove some of the problem’s constraints and set about solving the problem they wish they had.

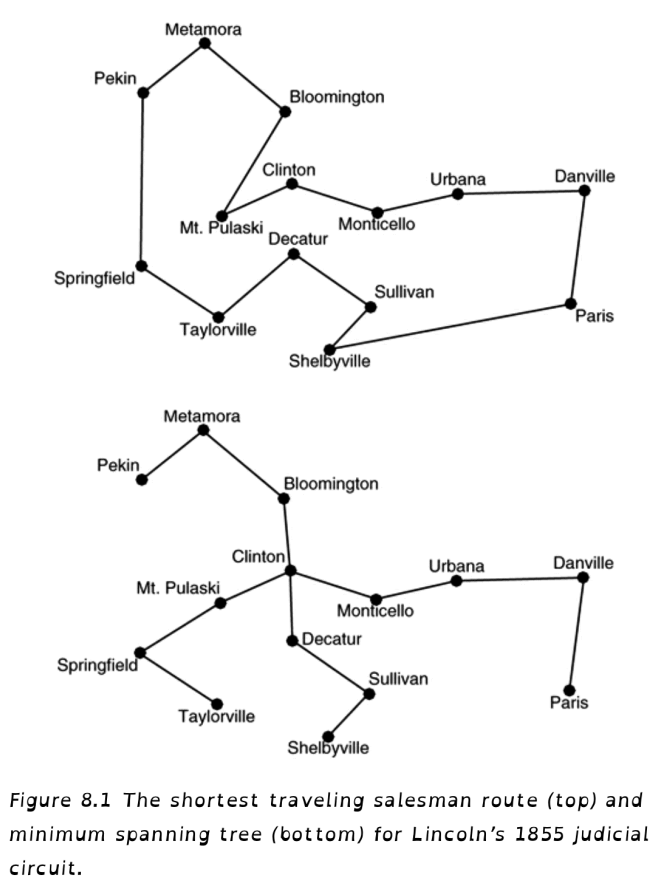

- In the travelling salesman problem it turns out that the minimum spanning tree is actually one of the best starting points from which to begin a search for the real solution.

TSP graph and min spanning tree.

TSP graph and min spanning tree.- What relaxation cannot do is offer you a guaranteed shortcut to the perfect answer.

- But, if we’re willing to accept solutions that are close enough, then even some of the hairiest problems around can be tamed with the right techniques.

Continuous Relaxation¶

- A lot of problems become computationally hard, when you can’t do half of this and half of that.

- Researchers confronted with a discrete optimization problem might gaze at those strategies enviously—but they also can do more than that.

- They can try to relax their discrete problem into a continuous one and see what happens.

- Continuous Relaxation with rounding will give us an easily computed solution that’s not half bad.

- It’s mathematically guaranteed to get everyone you want to the party while sending out at most twice as many invitations as the best solution obtainable by brute force.

- Continuous Relaxation is not a magic bullet: it still doesn’t give us an efficient way to get to the truly optimal answers, only to their approximations.

Lagrangian Relaxation¶

- An optimization problem has two parts: the rules and the scorekeeping.

- In Lagrangian Relaxation, we take some of the problem’s constraints and bake them into the scoring system instead.

- Lagrangian Relaxation—where some impossibilities are downgraded to penalties, the inconceivable to the undesirable—enables progress to be made.

Learning To Relax¶

- Constraint Relaxation, simply removes some constraints altogether and makes progress on a looser form of the problem before coming back to reality.

- Continuous Relaxation, turns discrete or binary choices into continua: when deciding between iced tea and lemonade, first imagine a 50–50 “Arnold Palmer” blend and then round it up or down.

- Lagrangian Relaxation, turns impossibilities into mere penalties, teaching the art of bending the rules (or breaking them and accepting the consequences).

- Unless we’re willing to spend eons striving for perfection every time we encounter a hitch, hard problems demand that instead of spinning our tires we imagine easier versions and tackle those first.

Randomness¶

- Sometimes the best solution to a problem is to turn to chance rather than trying to fully reason out an answer.

There is no such thing as absolute certainty, but there is assurance sufficient for the purposes of human life.

Sampling¶

- Laplace’s proposal pointed to a profound general truth: when we want to know something about a complex quantity, we can estimate its value by sampling from it.

The test of a first-rate intelligence is the ability to hold two opposing ideas in mind at the same time and still retain the ability to function. ~ F. Scott Fitzgerald

Monte Carlo Method¶

- Replacing exhaustive probability calculations with sample simulations.

Myopic Algorithm¶

- One that shortsightedly takes the best thing available every step of the way.

Randomized Algorithms¶

- It is much easier to multiply primes together than to factor them back out.

- With big enough primes—say, a thousand digits—the multiplication can be done in a fraction of a second while the factoring could take literally millions of years;

- This makes for what is known as a “one-way function.”

- The primes used in modern cryptography are hundreds of digits long

- We’re so used to mathematics being a realm of certainty that it’s jarring to think that a number could be “probably prime” or “almost definitely prime.

- Modern cryptographic systems, the ones that encrypt Internet connections and digital transactions, are tuned for a false positive rate of less than one in a million billion billion.

- In other words, that’s a decimal that begins with twenty-four zeros—less than one false prime for the number of grains of sand on Earth.

- As Harvard’s Michael Mitzenmacher puts it, “What we’re going to do is come up with an answer which saves you in time and space and trades off this third dimension: error probability."

Learning From Algorithms¶

Hill Climbing¶

- Even if you’re in the habit of sometimes acting on bad ideas, you should always act on good ones.

Metropolis Algorithm¶

- Your likelihood of following a bad idea should be inversely proportional to how bad an idea it is.

Simulated Annealing¶

- You should front-load randomness, rapidly cooling out of a totally random state, using ever less and less randomness as time goes on, lingering longest as you approach freezing.

Networking¶

How we connect

-

The foundation of human connection is protocol

- A shared convention of procedures and expectations, from handshakes and hellos to etiquette, politesse, and the full gamut of social norms.

- Machine connection is no different.

- Protocol is how we get on the same page

What you might call a connection is a consensual illusion between the two endpoints.

-

How do you know your messages are getting through?

Acknowledgement¶

-

One of the first questions for any protocol, human or machine, is, quite simply: how do you know your messages are getting through?

No transmission can be 100 percent reliable. —VINT CERF AND BOB KAHN

-

But the beautiful thing about communication,

- Communication is one of those delightful things that work only in practice; in theory it’s impossible.

Human Communication¶

- Why we don't need reliable, robust protocols for human communications and calls?

-

Simply because using reliable, robust protocols—with all their ACKs and retransmission of lost packets—to transmit the human voice is overkill.

- The humans provide the robustness themselves.

-

How to handle a person or a computer that's unreliable?

- The longer the round-trip time between sender and receiver, the longer it takes a silence to be significant—and the more information can be potentially “in flight” before the sender realizes there’s a problem.

Peter principle¶

the most exciting phrase to hear in science, the one that heralds new discoveries, is not ‘Eureka!’ but ‘That’s funny.’

- Every employee tends to rise to his level of incompetence.

- In a hierarchical organization, anyone doing a job proficiently is promoted for their good work till they reach a rank where they are unable to perform as good as they previously were, and this is where they get stuck for life.

- The result of this?

- Eventually every spot in an organization will come to be filled by someone doing that job badly

- The Solution? AIMD Algorithm.

- The AIMD algorithm can offer just such an approach, since it is explicitly designed to handle the demands of a volatile environment.

- In TCP, as we’ve seen, there’s no such thing as a one-way transmission: without consistent feedback, the sender will slow down almost immediately.

Tail Drop¶

- When a networking buffer fills up, what typically happens is called Tail Drop: an unceremonious way of saying that every packet arriving after that point is simply rejected, and effectively deleted.

- Buffers use delay—known in networking as “latency”—in order to maximize throughput.

- Bbuffer that’s operating permanently full gives you the worst of both worlds: all the latency and none of the give.

Exponential Backoff¶

~ The algorithm of Forgiveness. - "Ilunga means “a person who is ready to forgive any abuse for the first time, to tolerate it a second time, but never a third time" - Communication is an area where randomness becomes essential—indeed, networking wouldn’t be possible without it. - For a transport endpoint embedded in a network of unknown topology and with an unknown, unknowable and constantly changing population of competing conversations, only one scheme has any hope of working—exponential backoff - This elegant approach allows the network to accommodate potentially any number of competing signals. - Since the maximum delay length (2, 4, 8, 16…) forms an exponential progression, it’s become known as Exponential Backoff.

- When your computer is trying to reach a website that appears to be down, it uses Exponential Backoff—trying again one second later, again a few seconds after that, and so forth.

- A network can stabilize only if its users pull back at least as fast as the rate at which it is being overloaded.

Better Never than Late¶

- The feeling that one needs to look at everything on the Internet, or read all possible books, or see all possible shows, is bufferbloat.

Game Theory¶

I’m an optimist in the sense that I believe humans are noble and honorable, and some of them are really smart.… I have a somewhat more pessimistic view of people in groups. — STEVE JOBS

- Interacting with others doesn’t have to be a nightmare—although in the wrong game it surely can be.

Recursion¶

- The value of a stock isn’t what people think it’s worth but what people think people think it’s worth.

- We devote our intelligences to anticipating what average opinion expects the average opinion to be.

-

Alan Turing proved in 1936, a computer program can never tell you for sure whether another program might end up calculating forever without end—except by simulating the operation of that program and thus potentially going off the deep end itself.

-

Adopting a strategy that doesn’t require anticipating, predicting, reading into, or changing course because of the tactics of others is one way to cut the Gordian knot of recursion.

Reaching Equilibrium¶

- Mathematicians analyse games to identify a so-called equilibrium: that is, a set of strategies that both players can follow such that neither player would want to change their own play, given the play of their opponent.

- Mathematician John Nash proved in 1951 that every two-player game has at least one equilibrium.

- We always have an option to step out of our opponent’s head and look for the equilibrium, going directly to the best strategy, assuming rational play.

- The object of study in mathematics is truth; the object of study in computer science is complexity.

- From 2005 to 2008, Papadimitriou and his colleagues proved that simply finding Nash equilibria is intractable.